Hi,

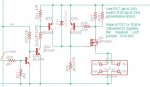

Would it be safer / easier if I drove each LED via one of these ........

Those two supplies have a "mains" (line) a.c. input, but the OP implied a 36 V d.c. input. Is this a "mobile" (e.g. battery-powered) application, or something like an aquarium? Note from the graph on page 9 of the Data Sheet that the LEDs need about 40 volts at 25 degrees C for 1 Amp, which falls to about 38 volts when they "warm up" to 105 degrees C.

You should be able to estimate the heating because more than 50% of the electrical energy will (still) be converted to heat. My first Google for "best LED efficiency" found THIS LINK, which includes the following (and then suggests even greater losses in practice):

"While energy conversion efficiency of incandescent lamps, for example, is between 10 % and 20 %, very efficient LEDs at present achieve values between 40 % and 50 %. Nevertheless, this is still only 40 – 50 %, so 50 % to 60 % of the power is lost as heat."
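As a rough sketch of that estimate, the snippet below takes the Data Sheet figures mentioned above (about 40 volts at 1 Amp) and the 40 % to 50 % efficiency range from the quote. The function name is just made up for illustration, and real LEDs may well do worse than this.

```python
def led_heat_watts(forward_voltage, current, efficiency):
    """Heat dissipated by an LED that converts `efficiency` of its input to light."""
    electrical_power = forward_voltage * current  # watts taken from the supply
    return electrical_power * (1.0 - efficiency)  # the remainder becomes heat


# About 40 V at 1 A (from the Data Sheet graph), 40 % to 50 % efficiency (from the quote)
for efficiency in (0.40, 0.50):
    heat = led_heat_watts(40.0, 1.0, efficiency)
    print(f"efficiency {efficiency:.0%}: about {heat:.0f} W of heat")
```

So each LED needs a heat sink able to get rid of something like 20 to 24 Watts.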

Thermal calculations are generally quite similar to Ohm's Law (hence the term Thermal Resistance), with Heat Flow equivalent to Current and Temperature difference equivalent to Voltage, but unfortunately even members on this forum don't always understand (or can't use) Ohm's Law.
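To show the analogy, here is a minimal sketch that adds up thermal resistances "in series" just like resistors. The thermal resistance values (0.5 and 2.0 degrees C per Watt) and the 20 Watts of heat are purely assumed numbers for illustration, not taken from any Data Sheet.

```python
def junction_temperature(ambient_c, heat_watts, thermal_resistances):
    """Thermal 'Ohm's Law': temperature rise = heat flow x total thermal resistance."""
    total_resistance = sum(thermal_resistances)  # series resistances add, in degrees C per watt
    return ambient_c + heat_watts * total_resistance


# Assumed values: 0.5 C/W junction-to-case, 2.0 C/W heat sink to air, 20 W of heat
temperature = junction_temperature(25.0, 20.0, [0.5, 2.0])
print(f"estimated junction temperature: {temperature:.0f} degrees C")
```

Replace the assumed figures with those from the LED and heat sink Data Sheets to get a realistic answer.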

Finally, I feel I should clarify the terms "PWM" (Pulse-Width-Modulation), "Switching Mode" or "Class D" (amplification), because there are two fundamentally different types (depending on whether an inductor is used or not). In the "simple" version (as in post #8) the LED is switched On and Off rapidly so that the average current (and brightness) is reduced, but the "instantaneous" (or peak) current/brightness is determined by (all) the resistance(s) in series with the Supply Rail. This means that some power is "lost" in the series resistance (which might be more "inconvenient" than in the LED which can have good Heat Sinking), and the illumination may "flicker" (depending on the PWM frequency).
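A small sketch of the "simple" version may help. The 48 volt rail and 8 Ohm series resistance are assumed values chosen only to give 1 Amp peak into a 40 volt LED, and the LED voltage is treated as constant, which is a simplification.

```python
def simple_pwm(supply_v, led_v, series_ohms, duty):
    """Return (peak_a, average_a, resistor_w, led_w) for PWM without an inductor."""
    peak_a = (supply_v - led_v) / series_ohms       # set only by the series resistance
    average_a = duty * peak_a                       # what the duty cycle actually controls
    resistor_w = duty * peak_a ** 2 * series_ohms   # average power lost in the resistance
    led_w = duty * led_v * peak_a                   # average power delivered to the LED
    return peak_a, average_a, resistor_w, led_w


# Assumed values: 48 V rail, 40 V LED, 8 Ohm in series, 50 % duty cycle
peak_a, average_a, resistor_w, led_w = simple_pwm(48.0, 40.0, 8.0, 0.5)
print(f"peak {peak_a} A, average {average_a} A")
print(f"resistor {resistor_w} W, LED {led_w} W")
```

Note that the peak current stays the same whatever the duty cycle; only the average changes.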

However "real PWM" (as I call it, because I worked on it for many years) uses an Inductor to "store" the surplus energy (voltage) and then applies it to the load (LED) via a Recovery Diode, during the "Off" (i.e. no input current) period of the PWM cycle. Thus the current in the LED is "continuous", so there should be no flicker and the LED can be brighter because the "peak" current flow may be lower. This type of PWM often operates at 100 kHz and upwards, to allow the inductor and supply (decoupling) capacitors to be much smaller. Most dc-dc converters (and some audio amplifiers now) use this principle.
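To illustrate why a higher frequency allows a smaller inductor, here is a sketch using the textbook ideal step-down ("buck") relations for continuous inductor current. It assumes a supply above the LED voltage (48 volts and 40 volts here) and a 0.2 Amp peak-to-peak ripple; all of those numbers are my assumptions, and losses are ignored.

```python
def buck_inductance_h(supply_v, led_v, ripple_a, frequency_hz):
    """Inductance (henries) giving `ripple_a` peak-to-peak ripple in an ideal buck stage."""
    duty = led_v / supply_v                # ideal buck: output = duty x input
    on_time_s = duty / frequency_hz        # time the switch is On in each cycle
    # During the On time the inductor sees (supply - LED) volts: dI = V * t / L
    return (supply_v - led_v) * on_time_s / ripple_a


# Assumed values: 48 V supply, 40 V LED, 0.2 A ripple
for frequency_hz in (100e3, 1e6):
    inductance = buck_inductance_h(48.0, 40.0, 0.2, frequency_hz)
    print(f"{frequency_hz / 1e3:.0f} kHz: about {inductance * 1e6:.0f} uH")
```

Ten times the frequency needs one tenth of the inductance for the same ripple.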

Cheers, Alan.